AI is a UI Problem

A lot of people think that AI is all about intelligence. They believe that if the models get smarter, the Revolution will come from a massive influx of freely available intelligence on tap. Sounds plausible.

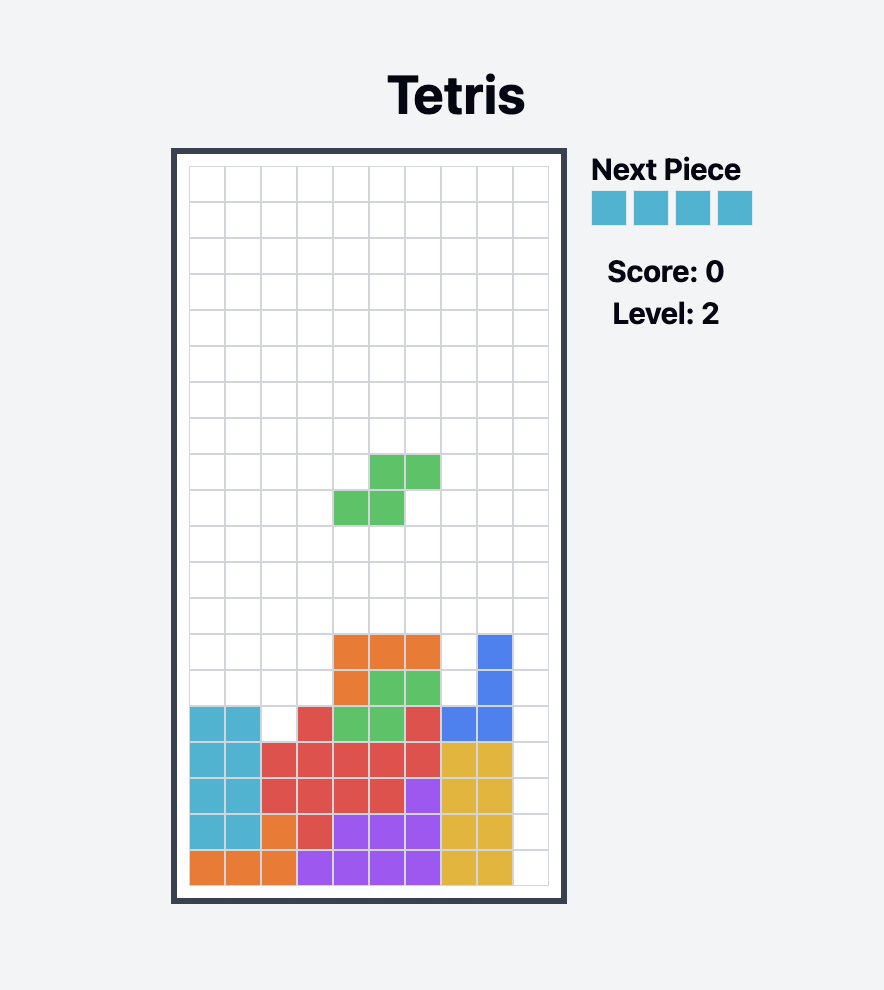

It used to take a couple hours to coax GPT-3 to produce a passable IB Literature essay, in fits and starts. The other day I built a playable version of Tetris with Claude in an “artifact.” Took me about 40 minutes.

But so what?

It means that the Revolution in AI is currently happening in UI design and UX integration (UI is the user interface, how we interact with something; UX is the experience we have when using something). The distance between my idea and its execution is shrinking, not because the models are smarter, though they have gotten smarter over time, but because I’m interacting with them differently.

If you follow AI news, you are bombarded by “AI in XYZ” where “XYZ” is pretty much everything. AI in the search field on WhatsApp. AI in GitHub. AI in watches and glasses and odd little boxes and brooches. AI in e-ink poetry clocks (and if you think I haven’t bought one of those, and was tempted to buy two, and am eagerly awaiting the Kickstarter shipment, think again, dear reader). AI in schools.

Many people are trying to solve the AI-UI problem. The question is not “how much smarter will the models get?” The question is “how will we most comfortably, successfully, delightfully, and profitably interact with the models?”

Some companies are getting this right. Anthropic’s “Projects” feature is a good example – you can create a foundation of knowledge, even shared among multiple users. As your project evolves and you add new documents to this foundation, the chats produce new results. Anthropic’s “artifacts”, as I already noted, are another example of a new UI that changes the nature of the tool.

Apple’s “Apple Intelligence” is rumored to be yet another UI/UX shift in which AI is fully embedded in the device and its operation, notably privacy-first and on-device-when-possible. And to be clear, when I say that the Revolution is being wrought in the world of user interfaces, I don’t mean that engineering has nothing to do with it. No doubt there is significant engineering muscle behind this new user experience. This is Apple’s point, over and over again: that UI/UX and engineering are best served as a single product. We’ll see if they’re right when it comes to AI-UI.

Some companies are getting this wrong. Google’s AI-powered search results are painful and unwelcome. The failures of Humane’s “pin” and the Rabbit R1 are now legendary.

What impact will this have on schools, on young people, on teaching and learning?

A small but mighty company that I’ve interacted with over the past year, Flint, is attempting to solve the UI/UX problem for school settings. Schools can’t afford to let students loose with these powerful models and hope they’ll use them responsibly. There is evidence – shocker! – that letting an AI do your homework will result in you learning less. So Flint offers students customized interactions with AI – fun, interesting, challenging, designed by the teacher with a lot of useful assistance from the tool itself – that also allow teachers and the school administration full visibility into how students use AI. This means we can guide students to productive uses and habits. Same underlying models, better UI.

A highly anticipated release in the AI industry is Advanced Voice Mode in ChatGPT. If you watch the demonstrations that OpenAI released a couple months ago, it’s clear that AVM achieves something new without being all that new in terms of IQ. It’s just GPT-4o with some fancy voice technology.[1] The voice is real time, startlingly life-like, cloyingly positive (but also, with the addition of ScarJo’s huskiness, comfortingly alluring, which is an important aspect of the product), and, of course, smarter than you.

Again, this is not a revolutionary intelligence product – that part is old news. This is a new UI, a new way to interact.

The UI shifts happening now – from chatbots that require a lot of effort to drive, to voice and personalized device interfaces that “know” you and can “talk” to you – will be the ingredients that cause the biggest shifts in perceptions and use.

People, including children, will immediately form intimate bonds with this new creature. They will experience a heightened emotional response when conversing with it – including in the context of school or learning. (Greater emotional responses will result in deeper learning opportunities.) It will be a machine that can legitimately cajole you into doing a little more practice before you log off and fire up the Xbox. (Like, you’ll want to please it so you’ll put in a few more minutes on the math drills.) It will be a machine that can legitimately talk you through a tough situation. Again, GPT-4o can already do this, but only in the form of long paragraphs, and many young people aren’t as facile with long-form text as their elders.

It will also, of course, be yet another avenue for addiction, loneliness, and manipulation by forces beyond a young person’s control. But that’s true of all technology, so it’s not really worth covering here in greater depth than it already has been by others. Suffice it to say: legitimately dangerous, like a power tool.

The main point is that, good or bad, helpful or harmful, AI is a UI problem.

The Singularity may simply happen at the point when we wake up and head to school or work with a sense of anticipation, eager to collaborate with our AI friends, who are so helpful, who always apologize for the confusion and thank us for pointing out their error, and who never get tired of listening to our hopes, sorrows, bad poems, dreams.

The Singularity is a really, really successful AI UI.

-

[1] I’m sure I’m unfairly minimizing the engineering efforts that allow this to happen, but the point is that the underlying model hasn’t changed.